Deploying a Dapr Sidecar to Azure Container Instances

Containers have become one of the main, if not the main, ways to modularize, isolate, encapsulate, and package applications in the cloud. The sidecar pattern allows for taking this even further by allowing the separation of functionalities like monitoring, logging, or configuration from the business logic. This is why I recommend that the teams who are adopting containers adopt sidecars as well. One of my preferred suggestions is Dapr which can bring early value by providing abstractions for message broker integration, encryption, observability, secret management, state management, or configuration management.

To my surprise, many conversations that start around adopting sidecars quickly deviate to "we should set up a Kubernetes cluster". It's almost as if there were only two options out there - you either run a standalone container or you need Kubernetes for anything more complicated. This is not the case. There are multiple ways to run containers and you should choose the one that is most suitable for your current context. Many of those options will give you more sophisticated features like sidecars or init containers while your business logic is still in a single container. Sidecars bring an additional benefit here: they enable later evolution to more complex container hosting options without requiring code changes.

In the case of Azure, such a service that enables adopting sidecars at an early stage is Azure Container Instances.

Quick Reminder - Azure Container Instances Can Host Container Groups

Azure Container Instances provides a managed approach for running containers in a serverless manner, without orchestration. What I've learned is that a common misconception is that Azure Container Instances can host only a single container. That is not exactly true. Azure Container Instances can host a container group (if you're using Linux containers 😉).

A container group is a collection of containers scheduled on the same host and sharing a lifecycle, resources, and a local network. The container group has a single public IP address, but the publicly exposed ports can forward to ports exposed on different containers. At the same time, all the containers within the group can reach each other via localhost. This is what enables the sidecar pattern.

How to create a container group? There are three options:

- With ARM/Bicep

- With Azure CLI by using a YAML file

- With Azure CLI by using a Docker Compose file

I'll go with Bicep here. The Microsoft.ContainerInstance namespace contains only a single type, which is containerGroups. This means that from the ARM/Bicep perspective there is no difference between deploying a standalone container and deploying a container group - the resource properties contain a containers list where you specify the containers.

resource containerGroup 'Microsoft.ContainerInstance/containerGroups@2023-05-01' = {
  name: CONTAINER_GROUP
  location: LOCATION
  ...
  properties: {
    sku: 'Standard'
    osType: 'Linux'
    ...
    containers: [
      ...
    ]
    ...
  }
}

How about a specific example? I've mentioned that Dapr is one of my preferred sidecars, so I'm going to use it here.

Running Dapr in Self-Hosted Mode Within a Container Group

Dapr has several hosting options. It can be self-hosted with Docker, Podman, or without containers. It can be hosted in Kubernetes with first-class integration. It's also available as a serverless offering - part of Azure Container Apps. The option of interest in the context of Azure Container Instances is self-hosted with Docker, but the list also shows how Dapr enables easy evolution from Azure Container Instances to Azure Container Apps, Azure Kubernetes Service, or non-Azure Kubernetes clusters.

But before we are ready to deploy the container group, we need some infrastructure around it. We should start with a resource group, a container registry, and a managed identity.

az group create -l $LOCATION -g $RESOURCE_GROUP

az acr create -n $CONTAINER_REGISTRY -g $RESOURCE_GROUP --sku Basic

az identity create -n $MANAGED_IDENTITY -g $RESOURCE_GROUP

We will be using the managed identity for role-based access control where possible, so we should reference it as the identity of the container group in our Bicep template.

resource managedIdentity 'Microsoft.ManagedIdentity/userAssignedIdentities@2023-01-31' existing = {
  name: MANAGED_IDENTITY
}

resource containerGroup 'Microsoft.ContainerInstance/containerGroups@2023-05-01' = {
  ...
  identity: {
    type: 'UserAssigned'
    userAssignedIdentities: {
      '${managedIdentity.id}': {}
    }
  }
  properties: {
    ...
  }
}

The Dapr sidecar requires a components directory. It's a folder that contains YAML files with component definitions. To provide that folder to the Dapr sidecar container, we have to mount it as a volume. Azure Container Instances supports mounting an Azure file share as a volume, so we have to create one.

az storage account create -n $STORAGE_ACCOUNT -g $RESOURCE_GROUP --sku Standard_LRS

az storage share create -n daprcomponents --account-name $STORAGE_ACCOUNT

The created Azure file share needs to be added to the list of volumes that can be mounted by containers in the group. Sadly, the integration between Azure Container Instances and Azure file shares doesn't support role-based access control, so an access key has to be used.

...

resource storageAccount 'Microsoft.Storage/storageAccounts@2022-09-01' existing = {
  name: STORAGE_ACCOUNT
}

resource containerGroup 'Microsoft.ContainerInstance/containerGroups@2023-05-01' = {
  ...
  properties: {
    ...
    volumes: [
      {
        name: 'daprcomponentsvolume'
        azureFile: {
          shareName: 'daprcomponents'
          storageAccountKey: storageAccount.listKeys().keys[0].value
          storageAccountName: storageAccount.name
          readOnly: true
        }
      }
    ]
    ...
  }
}

We also need to assign the AcrPull role to the managed identity so it can access the container registry.

az role assignment create --assignee $MANAGED_IDENTITY_OBJECT_ID \
  --role AcrPull \
  --scope "/subscriptions/$SUBSCRIPTION_ID/resourcegroups/$RESOURCE_GROUP/providers/Microsoft.ContainerRegistry/registries/$CONTAINER_REGISTRY"
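The role assignment relies on $MANAGED_IDENTITY_OBJECT_ID and $SUBSCRIPTION_ID, which are not set by any of the earlier commands. One possible way to resolve them is sketched below. The function names are hypothetical helpers of mine, and the sketch assumes you are signed in with the Azure CLI and that $MANAGED_IDENTITY and $RESOURCE_GROUP are already set.

```shell
#!/usr/bin/env bash

# Builds the resource ID used as the role assignment scope.
# Arguments: subscription ID, resource group name, container registry name.
acr_scope() {
  printf '/subscriptions/%s/resourcegroups/%s/providers/Microsoft.ContainerRegistry/registries/%s' "$1" "$2" "$3"
}

# Resolves both IDs from the current Azure CLI context (requires `az login`).
resolve_ids() {
  SUBSCRIPTION_ID=$(az account show --query id -o tsv)
  # principalId is the object ID of the user-assigned managed identity.
  MANAGED_IDENTITY_OBJECT_ID=$(az identity show -n "$MANAGED_IDENTITY" -g "$RESOURCE_GROUP" --query principalId -o tsv)
}
```

After calling resolve_ids, the scope can be passed as --scope "$(acr_scope "$SUBSCRIPTION_ID" "$RESOURCE_GROUP" "$CONTAINER_REGISTRY")".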

I'm skipping the creation of the image for the application with the business logic, pushing it to the container registry, adding its definition to the containers list, and exposing needed ports from the container group - I want to focus on the Dapr sidecar.

In this example, I will be grabbing the daprd image from Docker Hub.

The startup command for the sidecar is ./daprd. We need to provide a --resources-path parameter that points to the path where the daprcomponentsvolume will be mounted. I'm also providing the --app-id parameter. This parameter is mostly used for service invocation (which won't be the case here, so I'm not providing --app-port), but Dapr also uses it in other scenarios (for example, as a partition key for some state stores).

Two ports need to be exposed from this container (not publicly): 3500 is the default HTTP endpoint port and 50001 is the default gRPC endpoint port. Both ports can be changed through configuration if they are needed by another container.

resource containerGroup 'Microsoft.ContainerInstance/containerGroups@2023-05-01' = {
  ...
  properties: {
    ...
    containers: [
      ...
      {
        name: 'dapr-sidecar'
        properties: {
          image: 'daprio/daprd:1.10.9'
          command: [ './daprd', '--app-id', 'APPLICATION_ID', '--resources-path', './components' ]
          volumeMounts: [
            {
              name: 'daprcomponentsvolume'
              mountPath: './components'
              readOnly: true
            }
          ]
          ports: [
            {
              port: 3500
              protocol: 'TCP'
            }
            {
              port: 50001
              protocol: 'TCP'
            }
          ]
          ...
        }
      }
    ]
    ...
  }
}

I've omitted the resources definition for brevity.

Now the Bicep template can be deployed.

az deployment group create -g $RESOURCE_GROUP -f container-group-with-dapr-sidecar.bicep

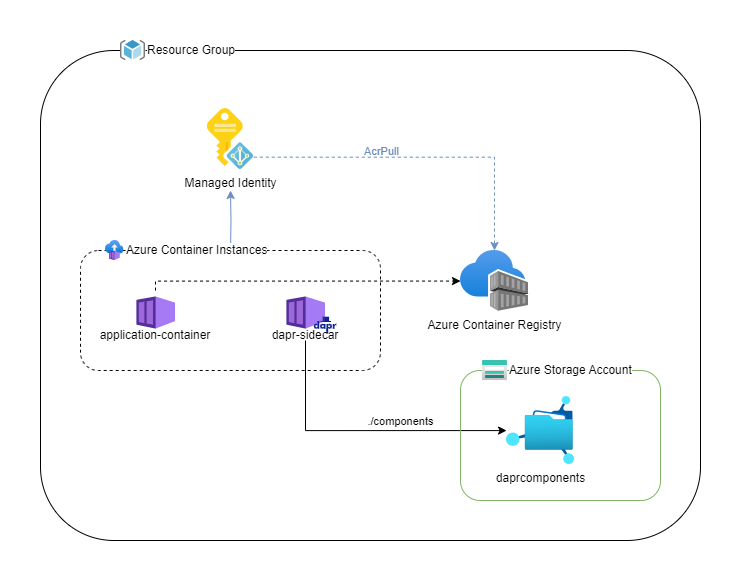

The below diagram visualizes the final state after the deployment.

Configuring a Dapr Component

We have a running Dapr sidecar, but we have yet to make it truly useful. To be able to use the APIs provided by Dapr, we have to provide the component definitions mentioned earlier, which supply the implementation for those APIs. As we already have a storage account as part of our infrastructure, a state store component seems like a good choice. Dapr supports quite an extensive list of stores, two of which are based on Azure Storage: Azure Blob Storage and Azure Table Storage. Let's use the Azure Table Storage one.

First I'm going to create a table. This is not a required step, because the component can do it for us, but let's assume we want to seed some data manually before the deployment.

Second, and more important, is granting the needed permissions on the storage account. Dapr has very good support for authenticating to Azure, including managed identities and role-based access control, so I'm just going to assign the Storage Table Data Contributor role to our managed identity at the scope of the storage account.

az storage table create -n $TABLE_NAME --account-name $STORAGE_ACCOUNT

az role assignment create --assignee $MANAGED_IDENTITY_OBJECT_ID \
  --role "Storage Table Data Contributor" \
  --scope "/subscriptions/$SUBSCRIPTION_ID/resourcegroups/$RESOURCE_GROUP/providers/Microsoft.Storage/storageAccounts/$STORAGE_ACCOUNT"

The last thing we need is the component definition. The component type we want is state.azure.tablestorage. The name is what we will be using when making calls with a Dapr client. As we are going to use a managed identity for authentication, we should provide accountName, tableName, and azureClientId as metadata. I'm additionally setting skipCreateTable because I created the table earlier and the component would otherwise fail when attempting to create it again.

apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: state.table.<TABLE_NAME>
spec:
  type: state.azure.tablestorage
  version: v1
  metadata:
  - name: accountName
    value: <STORAGE_ACCOUNT>
  - name: tableName
    value: <TABLE_NAME>
  - name: azureClientId
    value: <Client ID of MANAGED_IDENTITY>
  - name: skipCreateTable
    value: true

The file with the definition needs to be uploaded to the file share that is mounted as the components directory. The container group needs to be restarted for the component to be loaded. We can quickly verify that this has happened by taking a look at the sidecar logs.

time="2023-08-31T21:25:22.5325911Z"

level=info

msg="component loaded. name: state.table.<TABLE_NAME>, type: state.azure.tablestorage/v1"

app_id=APPLICATION_ID

instance=SandboxHost-638291138933285823

scope=dapr.runtime

type=log

ver=1.10.9
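The upload, restart, and log check could be scripted roughly as follows. This is a sketch rather than the exact commands used for this post: the function names and the local file path are hypothetical, the container name dapr-sidecar comes from the Bicep template, and az storage file upload is assumed to be able to authenticate against the storage account (for example with an account key available to the CLI).

```shell
#!/usr/bin/env bash

# Uploads a component definition to the file share mounted as the components directory.
# Argument: path to the local YAML file.
upload_component() {
  az storage file upload --account-name "$STORAGE_ACCOUNT" --share-name daprcomponents --source "$1"
}

# Restarts all containers in the group so the sidecar reads the components directory again.
restart_group() {
  az container restart -n "$CONTAINER_GROUP" -g "$RESOURCE_GROUP"
}

# Prints the logs of the sidecar container.
sidecar_logs() {
  az container logs -n "$CONTAINER_GROUP" -g "$RESOURCE_GROUP" --container-name dapr-sidecar
}

# Succeeds when the log text ($1) reports that the component named $2 was loaded.
component_loaded() {
  printf '%s\n' "$1" | grep -qF -- "component loaded. name: $2,"
}
```

A typical sequence would be upload_component with the path to the definition file, then restart_group, then component_loaded "$(sidecar_logs)" "state.table.$TABLE_NAME".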

Now you can start managing your state with a Dapr client for your language of choice, or with the HTTP API if a client doesn't exist.
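As an illustration of the HTTP API route, here is a minimal sketch that uses curl against the Dapr state endpoints (/v1.0/state/<store name>). It has to run from a container inside the group, because the sidecar ports are not exposed publicly. The function names, the sample key, and the DAPR_HTTP_PORT override are assumptions of mine; the store name is the component name from the definition.

```shell
#!/usr/bin/env bash

# Port of the sidecar's HTTP endpoint; 3500 is the default used in this post.
DAPR_HTTP_PORT="${DAPR_HTTP_PORT:-3500}"

# Builds the state endpoint URL for a store ($1) and an optional key ($2).
state_url() {
  url="http://localhost:${DAPR_HTTP_PORT}/v1.0/state/$1"
  if [ -n "${2:-}" ]; then url="$url/$2"; fi
  printf '%s' "$url"
}

# Saves one key/value pair. $1 = store, $2 = key, $3 = value (must already be valid JSON).
save_state() {
  curl -sS -X POST "$(state_url "$1")" \
    -H 'Content-Type: application/json' \
    -d "[{\"key\": \"$2\", \"value\": $3}]"
}

# Reads the value stored under a key. $1 = store, $2 = key.
get_state() {
  curl -sS "$(state_url "$1" "$2")"
}
```

For example, save_state "state.table.$TABLE_NAME" order-1 '{"status": "new"}' followed by get_state "state.table.$TABLE_NAME" order-1 should return the stored JSON value. A successful save returns an empty response body.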

The Power of Abstraction, Decoupling, and Flexibility

As you can see, the needed increase in complexity (when compared to a standalone container hosted in Azure Container Instances) is not that significant. At the same time, the gain is. Dapr allows us to abstract all the capabilities it provides in the form of building blocks. It also decouples the capabilities provided by building blocks from the components providing implementation. We can change Azure Table Storage to Azure Cosmos DB if it better suits our solution, or to AWS DynamoDB if we need to deploy the same application to AWS. We also now have the flexibility of evolving our solution when the time comes to use a more sophisticated container offering - we just need to take Dapr with us.