HTTP/2 Server Push and ASP.NET MVC - Cache Digest

In my previous post I didn't write much about how Server Push works with caching, besides the fact that it does. This can easily be verified by looking at the Network tab in Chrome Developer Tools while making subsequent requests to the sample action.

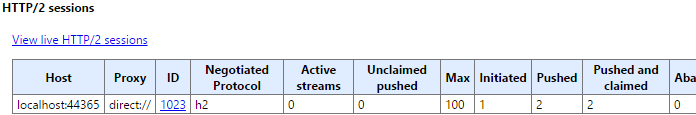

But this isn't the full picture. The chrome://net-internals/#http2 tab can be used to get more details. The first screenshot below represents the cold scenario, while the second represents the warm one.

The highlighted column shows that the server pushed resources which were never used. "View live HTTP/2 sessions" provides even more detailed debug information, down to individual HTTP/2 frames. Going through them reveals that HTTP2_SESSION_RECV_PUSH_PROMISE, HTTP2_SESSION_RECV_HEADERS and HTTP2_SESSION_RECV_DATA entries are present for both pushed resources, so the resources are transferred even in the warm scenario. This shouldn't affect latency, as the stream carrying the HTML is supposed to have the highest priority, which means that the server should start sending (and the browser receiving) the data as soon as something is available, even if the transfer of the pushed resources has not finished. On the other hand, bandwidth is being wasted, and wasting bandwidth often means losing money. Nobody wants to lose money, so this needs to be resolved, and Cache Digest aims to do exactly that.

How Cache Digest solves the issue

The Cache Digest specification proposal (in its current shape) uses a Golomb-Rice coded Bloom filter to tell the server which resources are already in the client's cache. The algorithm hashes URLs and ETags, which ensures proper identification (and, with correct ETags, also freshness) of the resources. The server can query the digest and, if a resource intended for push is present, skip the push.

There is no native browser support for Cache Digest yet (at least none that I know of), but there are two ways in which HTTP/2 servers (http2server, h2o) and third-party libraries are trying to provide it:

- On the client side, using Service Workers and the Cache API

- On the server side, by providing a cookie-based fallback

Implementing Cache Digest with Service Worker and Cache API

One of the third-party libraries providing Cache Digest support on the client side is cache-digest-immutable. It combines Cache Digest with the HTTP Immutable Responses proposal in order to avoid overly aggressive caching. This means that only resources whose Cache-Control header contains the immutable extension will be considered. To add this extension to the pushed resources, the location and customHeaders elements of web.config can be used.

    <?xml version="1.0"?>
    <configuration>
      ...
      <location path="content/css/normalize.css">
        <system.webServer>
          <httpProtocol>
            <customHeaders>
              <add name="Cache-Control" value="max-age=31536000, immutable" />
            </customHeaders>
          </httpProtocol>
        </system.webServer>
      </location>
      <location path="content/css/site.css">
        <system.webServer>
          <httpProtocol>
            <customHeaders>
              <add name="Cache-Control" value="max-age=31536000, immutable" />
            </customHeaders>
          </httpProtocol>
        </system.webServer>
      </location>
      ...
    </configuration>

A quick look at the responses after running the demo application shows that the headers are being added properly. Now the Service Worker can be set up according to the library's instructions. From this point on, subsequent requests to the demo application will contain the cache-digest header. In the case of the demo application its value is CdR2gA; complete. The part before the ; is the digest (Base64 encoded), while the rest contains flags.

The flags can be parsed easily by checking if corresponding constants are present in the header.

    var compareInfo = CultureInfo.InvariantCulture.CompareInfo;
    var compareOptions = CompareOptions.IgnoreCase;

    bool reset = (compareInfo.IndexOf(cacheDigestHeaderValue, "RESET", compareOptions) >= 0);
    bool complete = (compareInfo.IndexOf(cacheDigestHeaderValue, "COMPLETE", compareOptions) >= 0);
    bool validators = (compareInfo.IndexOf(cacheDigestHeaderValue, "VALIDATORS", compareOptions) >= 0);
    bool stale = (compareInfo.IndexOf(cacheDigestHeaderValue, "STALE", compareOptions) >= 0);
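
One caveat with the substring checks above: they search the whole header value, including the Base64 digest, which could by chance contain a sequence such as `stale`. A stricter variant can be sketched by splitting the value first. This is only a sketch: it assumes that `;` separates the digest from the flags and the flags from each other, and `CacheDigestHeaderParser` is a hypothetical helper that is not part of the demo project.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the demo project.
public static class CacheDigestHeaderParser
{
    // Splits a value such as "CdR2gA; complete" into the digest and its flags.
    public static string Parse(string headerValue, out HashSet<string> flags)
    {
        // Assumption: ';' separates the digest from the flags and the flags from each other.
        string[] parts = headerValue.Split(';');

        // Flags are compared case-insensitively, like in the checks above.
        flags = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
        for (int index = 1; index < parts.Length; index++)
        {
            string flag = parts[index].Trim();
            if (flag.Length > 0)
            {
                flags.Add(flag);
            }
        }

        // The first part is the (still unpadded) Base64 digest.
        return parts[0].Trim();
    }
}
```

With this helper, `flags.Contains("COMPLETE")` can only match a real flag and never a fragment of the digest.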

My first attempt to decode the digest failed miserably with an awful exception. The reason is that the Base64 padding is truncated in the header, so it must be added back before decoding.

    int separatorIndex = cacheDigestHeaderValue.IndexOf(DigestValueSeparator);
    string digestValueBase64 = cacheDigestHeaderValue.Substring(0, separatorIndex);

    int neededPadding = (digestValueBase64.Length % 4);
    if (neededPadding > 0)
    {
        digestValueBase64 += new string('=', 4 - neededPadding);
    }

    byte[] digestValue = Convert.FromBase64String(digestValueBase64);

Now the digest query algorithm can be implemented. The algorithm goes through the digest value bit by bit, so the first step is to change the array of bytes into an array of bools.

    bool[] bitArray = new bool[digestValue.Length * 8];
    int bitArrayIndex = bitArray.Length - 1;

    // Walk the bytes from last to first and fill the bit array from its end,
    // so the most significant bit of every byte ends up first.
    for (int byteIndex = digestValue.Length - 1; byteIndex >= 0; byteIndex--)
    {
        byte digestValueByte = digestValue[byteIndex];
        for (int byteBitIndex = 0; byteBitIndex < 8; byteBitIndex++)
        {
            bitArray[bitArrayIndex--] = ((digestValueByte & 1) == 1);
            digestValueByte = (byte)(digestValueByte >> 1);
        }
    }

The algorithm also requires reading a series of bits as an integer in a few places; the method below takes care of that.

    private static uint ReadUInt32FromBitArray(bool[] bitArray, int startIndex, int length)
    {
        uint result = 0;

        // Shift in one bit at a time, most significant bit first.
        for (int bitIndex = startIndex; bitIndex < (startIndex + length); bitIndex++)
        {
            result <<= 1;
            if (bitArray[bitIndex])
            {
                result |= 1;
            }
        }

        return result;
    }

With this method, the count of URLs and the probability can be easily retrieved.

    // Read the first 5 bits of digest-value as an integer;
    // let N be two raised to the power of that value.
    int count = (int)Math.Pow(2, ReadUInt32FromBitArray(bitArray, 0, 5));

    // Read the next 5 bits of digest-value as an integer;
    // let P be two raised to the power of that value.
    int log2Probability = (int)ReadUInt32FromBitArray(bitArray, 5, 5);
    uint probability = (uint)Math.Pow(2, log2Probability);

The part which reads the hashes requires keeping in mind that there might be additional 0 bits at the end, so without proper checks there is a risk of reading past the end of the array. I've decided to keep the hashes in a HashSet for quicker lookups later.

    HashSet<uint> hashes = new HashSet<uint>();

    // Let C be -1.
    long hash = -1;

    int hashesBitIndex = 10;
    while (hashesBitIndex < bitArray.Length)
    {
        // Read '0' bits until a '1' bit is found; let Q be the number of '0' bits.
        uint q = 0;
        while ((hashesBitIndex < bitArray.Length) && !bitArray[hashesBitIndex])
        {
            q++;
            hashesBitIndex++;
        }

        if ((hashesBitIndex + log2Probability) < bitArray.Length)
        {
            // Discard the '1'.
            hashesBitIndex++;

            // Read log2(P) bits after the '1' as an integer. Let R be its value.
            uint r = ReadUInt32FromBitArray(bitArray, hashesBitIndex, log2Probability);

            // Let D be Q * P + R.
            uint d = (q * probability) + r;

            // Increment C by D + 1.
            hash = hash + d + 1;

            hashes.Add((uint)hash);
            hashesBitIndex += log2Probability;
        }
        else
        {
            // Only padding or a truncated entry is left; stop here,
            // otherwise a malformed digest would keep the loop spinning.
            break;
        }
    }
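
To see these steps work end to end, the sample value from the demo application (CdR2gA) can be traced through a condensed decoder. The sketch below repeats the steps above in one self-contained class; `CacheDigestDecoder` and its members are hypothetical names used only for this illustration. The sample decodes to the bytes 09 D4 76 80, which gives N = 2, P = 128 and the hashes 163 and 216, one per pushed stylesheet.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, condensed decoder used only to trace the sample value.
public static class CacheDigestDecoder
{
    public static List<uint> Decode(string digest, out uint count, out int log2Probability)
    {
        // Restore the Base64 padding that the header value omits.
        int remainder = digest.Length % 4;
        if (remainder > 0)
        {
            digest += new string('=', 4 - remainder);
        }
        byte[] bytes = Convert.FromBase64String(digest);

        // Expand to bits, most significant bit of each byte first.
        bool[] bits = new bool[bytes.Length * 8];
        for (int i = 0; i < bits.Length; i++)
        {
            bits[i] = (bytes[i / 8] & (0x80 >> (i % 8))) != 0;
        }

        count = 1u << (int)ReadBits(bits, 0, 5);      // N
        log2Probability = (int)ReadBits(bits, 5, 5);  // log2(P)

        List<uint> hashes = new List<uint>();
        long hash = -1;
        int index = 10;
        while (index < bits.Length)
        {
            // Q is the number of '0' bits before the next '1'.
            uint q = 0;
            while (index < bits.Length && !bits[index])
            {
                q++;
                index++;
            }

            // Stop when only padding is left or the entry is incomplete.
            if (index + log2Probability >= bits.Length)
            {
                break;
            }

            index++; // Discard the '1'.
            uint r = ReadBits(bits, index, log2Probability);
            index += log2Probability;

            // D = Q * P + R; every hash is the previous one plus D + 1.
            hash += ((long)q << log2Probability) + r + 1;
            hashes.Add((uint)hash);
        }

        return hashes;
    }

    private static uint ReadBits(bool[] bits, int start, int length)
    {
        uint result = 0;
        for (int i = start; i < start + length; i++)
        {
            result = (result << 1) | (bits[i] ? 1u : 0u);
        }
        return result;
    }
}
```

Calling `CacheDigestDecoder.Decode("CdR2gA", out count, out log2Probability)` returns the list 163, 216 with `count` equal to 2 and `log2Probability` equal to 7. Note that `Convert.FromBase64String` accepts only the standard Base64 alphabet, so a digest using the URL-safe alphabet would need `-` and `_` mapped back first; that is not handled here.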

The last thing needed to query the digest value is the ability to calculate the hash of a given URL (and optionally ETag). The important thing here is that the DataView used by the Service Worker to convert the SHA-256 digest to an integer uses big-endian byte order internally, so the implementation must account for that.

    // URL should be properly percent-encoded (RFC3986).
    string key = url;

    // If validators is true and ETag is not null.
    if (validators && !String.IsNullOrWhiteSpace(entityTag))
    {
        // Append ETag to key.
        key += entityTag;
    }

    // Let hash-value be the SHA-256 message digest (RFC6234) of key, expressed as an integer.
    byte[] hash = new SHA256Managed().ComputeHash(Encoding.UTF8.GetBytes(key));
    uint hashValue = BitConverter.ToUInt32(hash, 0);
    if (BitConverter.IsLittleEndian)
    {
        hashValue = (hashValue & 0x000000FFU) << 24 | (hashValue & 0x0000FF00U) << 8
            | (hashValue & 0x00FF0000U) >> 8 | (hashValue & 0xFF000000U) >> 24;
    }

    // Truncate hash-value to log2(N*P) bits.
    int hashValueLength = (int)Math.Log(count * probability, 2);
    hashValue = (hashValue >> (_hashValueLengthUpperBound - hashValueLength))
        & (uint)((1 << hashValueLength) - 1);
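
With the decoded hashes and the URL hash in place, the query itself reduces to a set lookup. The following is a minimal sketch of how the pieces might fit together, not the demo project's actual QueryDigest: `CacheDigestQuery` and its members are hypothetical names, the hash is taken from the first four bytes of the SHA-256 digest as in the snippet above, and the exponents log2(N) and log2(P) are passed in directly.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;
using System.Text;

// Hypothetical sketch; the demo project's QueryDigest may differ.
public static class CacheDigestQuery
{
    // Hashes the key and keeps the top (log2Count + log2Probability) bits.
    public static uint ComputeHashValue(string url, string entityTag, bool validators,
        int log2Count, int log2Probability)
    {
        string key = url;
        if (validators && !String.IsNullOrWhiteSpace(entityTag))
        {
            key += entityTag;
        }

        byte[] hash;
        using (SHA256 sha256 = SHA256.Create())
        {
            hash = sha256.ComputeHash(Encoding.UTF8.GetBytes(key));
        }

        // Read the first four bytes as a big-endian integer, regardless of platform.
        uint hashValue = ((uint)hash[0] << 24) | ((uint)hash[1] << 16)
            | ((uint)hash[2] << 8) | (uint)hash[3];

        // Assumption: lengths above 32 are clamped, as only 32 bits are available.
        int length = Math.Min(log2Count + log2Probability, 32);
        return (length <= 0) ? 0 : hashValue >> (32 - length);
    }

    // Returns true when the digest (probably) contains the resource.
    public static bool QueryDigest(ISet<uint> hashes, string url, string entityTag,
        bool validators, int log2Count, int log2Probability)
    {
        return hashes.Contains(
            ComputeHashValue(url, entityTag, validators, log2Count, log2Probability));
    }
}
```

Passing the two exponents instead of N and P avoids the floating-point logarithm, since log2(N*P) is simply their sum. As with any Bloom-filter-like structure, a positive answer can be a false positive, in which case a push is skipped for a resource the client does not actually have.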

I've combined all this logic into the CacheDigestHashAlgorithm, CacheDigestValue and CacheDigestHeaderValue classes, which I've used in PushPromiseAttribute to push resources conditionally.

    [AttributeUsage(AttributeTargets.Class | AttributeTargets.Method, ...)]
    public class PushPromiseAttribute : FilterAttribute, IActionFilter
    {
        ...

        public void OnActionExecuting(ActionExecutingContext filterContext)
        {
            ...

            HttpRequestBase request = filterContext.HttpContext.Request;

            CacheDigestHeaderValue cacheDigest = (request.Headers["Cache-Digest"] != null) ?
                new CacheDigestHeaderValue(request.Headers["Cache-Digest"]) : null;

            IEnumerable<string> pushPromiseContentPaths = _pushPromiseTable.GetPushPromiseContentPaths(
                filterContext.ActionDescriptor.ControllerDescriptor.ControllerName,
                filterContext.ActionDescriptor.ActionName);

            foreach (string pushPromiseContentPath in pushPromiseContentPaths)
            {
                string pushPromiseContentUrl = GetAbsolutePushPromiseContentUrl(
                    filterContext.RequestContext,
                    pushPromiseContentPath);

                // Push only when the digest doesn't already contain the resource.
                if (!(cacheDigest?.QueryDigest(pushPromiseContentUrl) ?? false))
                {
                    filterContext.HttpContext.Response.PushPromise(pushPromiseContentPath);
                }
            }
        }
    }

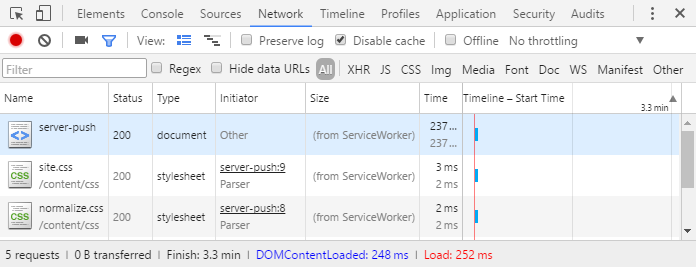

The easiest way to verify the functionality is to debug the demo application or to check "View live HTTP/2 sessions" in the chrome://net-internals/#http2 tab. After the initial request the Service Worker takes over fetching of the resources, adding the cache-digest header to subsequent requests and serving cached resources with the help of the Cache API. On the server side the cache-digest header is picked up and the push is skipped.

Implementing Cache Digest with cookie-based fallback

The idea behind the cookie-based fallback is very simple: the server generates the cache digest by itself and passes it between requests as a cookie. An important difference is that only the digest value can be stored (as cookies don't allow semicolons and white space in values), so the server needs to assume the flags. The algorithm for generating the digest value is similar to the one for reading it, so I'll skip it here (for those interested, it is split into the CacheDigestValue.FromUrls and CacheDigestValue.ToByteArray methods) and move on to the changes in PushPromiseAttribute.

    [AttributeUsage(AttributeTargets.Class | AttributeTargets.Method, ...)]
    public class PushPromiseAttribute : FilterAttribute, IActionFilter
    {
        ...

        public bool UseCookieBasedCacheDigest { get; set; }

        public uint CacheDigestProbability { get; set; }

        ...

        public void OnActionExecuting(ActionExecutingContext filterContext)
        {
            ...

            HttpRequestBase request = filterContext.HttpContext.Request;

            CacheDigestHeaderValue cacheDigest = null;
            if (UseCookieBasedCacheDigest)
            {
                cacheDigest = (request.Cookies["Cache-Digest"] != null) ?
                    new CacheDigestHeaderValue(
                        CacheDigestValue.FromBase64String(request.Cookies["Cache-Digest"].Value)) : null;
            }
            else
            {
                cacheDigest = (request.Headers["Cache-Digest"] != null) ?
                    new CacheDigestHeaderValue(request.Headers["Cache-Digest"]) : null;
            }

            // URLs (with optional ETags) which will make up the new cookie value.
            IDictionary<string, string> cacheDigestUrls = new Dictionary<string, string>();

            IEnumerable<string> pushPromiseContentPaths = _pushPromiseTable.GetPushPromiseContentPaths(
                filterContext.ActionDescriptor.ControllerDescriptor.ControllerName,
                filterContext.ActionDescriptor.ActionName);

            foreach (string pushPromiseContentPath in pushPromiseContentPaths)
            {
                string pushPromiseContentUrl = GetAbsolutePushPromiseContentUrl(
                    filterContext.RequestContext,
                    pushPromiseContentPath);

                if (!(cacheDigest?.QueryDigest(pushPromiseContentUrl) ?? false))
                {
                    filterContext.HttpContext.Response.PushPromise(pushPromiseContentPath);
                }

                cacheDigestUrls.Add(pushPromiseContentUrl, null);
            }

            if (UseCookieBasedCacheDigest)
            {
                HttpCookie cacheDigestCookie = new HttpCookie("Cache-Digest")
                {
                    Value = CacheDigestValue.FromUrls(cacheDigestUrls, CacheDigestProbability)
                        .ToBase64String(),
                    Expires = DateTime.Now.AddYears(1)
                };

                filterContext.HttpContext.Response.Cookies.Set(cacheDigestCookie);
            }
        }
    }

There shouldn't be anything surprising here, but the code exposes a couple of limitations of the cookie-based fallback.

First of all, there is no way to recreate the full list of pushed resources from the digest value. The code above creates a new cookie value during every request, which is good enough if we always attempt to push the same resources, but if we need to push something different for a specific action we will lose the information about resources which are not related to that action. One possible optimization would be to store in the digest only the resources which are supposed to be pushed almost always and, for actions which require a smaller subset, to leave the cookie unchanged when all the needed resources are already in the digest. Another approach would be to keep a registry of every resource pushed for a given session or client, but with such a detailed registry, additionally using a cookie becomes questionable.

The other limitation concerns checking resource freshness. The above implementation works well if names can be used for freshness control (for example, they contain versions), but in other cases we will not know that a resource has expired. In such scenarios the validators flag should be set to true and ETags provided.
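
Returning to the digest generation step that was skipped earlier: it mirrors the reading algorithm, writing for every sorted hash a run of Q '0' bits, a '1' and then R in log2(P) bits. The sketch below is an independent illustration under stated assumptions, not the code behind CacheDigestValue.FromUrls and CacheDigestValue.ToByteArray: `CacheDigestEncoder` is a hypothetical name, the hash values are assumed to be already computed and truncated, and the padding is trimmed to match the header form.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical encoder; the demo project's FromUrls and ToByteArray may differ.
public static class CacheDigestEncoder
{
    public static string Encode(IEnumerable<uint> hashValues, int log2Count, int log2Probability)
    {
        List<bool> bits = new List<bool>();
        WriteBits(bits, (uint)log2Count, 5);
        WriteBits(bits, (uint)log2Probability, 5);

        long previous = -1;

        // SortedSet both sorts the hashes and removes duplicates.
        foreach (uint value in new SortedSet<uint>(hashValues))
        {
            // D is the gap to the previous hash; Q and R are its quotient
            // and remainder when divided by P.
            long delta = value - previous - 1;
            long q = delta >> log2Probability;
            uint r = (uint)(delta & ((1L << log2Probability) - 1));

            for (long i = 0; i < q; i++)
            {
                bits.Add(false);
            }
            bits.Add(true);
            WriteBits(bits, r, log2Probability);

            previous = value;
        }

        // Pad with '0' bits to a whole number of bytes, most significant bit first.
        byte[] bytes = new byte[(bits.Count + 7) / 8];
        for (int i = 0; i < bits.Count; i++)
        {
            if (bits[i])
            {
                bytes[i / 8] |= (byte)(0x80 >> (i % 8));
            }
        }

        return Convert.ToBase64String(bytes).TrimEnd('=');
    }

    private static void WriteBits(List<bool> bits, uint value, int length)
    {
        for (int shift = length - 1; shift >= 0; shift--)
        {
            bits.Add(((value >> shift) & 1) == 1);
        }
    }
}
```

As a check against the Service Worker's output, `CacheDigestEncoder.Encode(new uint[] { 216, 163 }, 1, 7)` returns `"CdR2gA"`, the same value the demo application receives in the cache-digest header.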

Final thoughts

Cache Digest seems to be the missing piece which allows fine-tuning of HTTP/2 Server Push. There are a number of open issues around the proposal, so things might change, but the idea is already being adopted by some web servers with HTTP/2 support. Hopefully, when the proposal gets closer to its final shape, browsers will start providing native support.